Multitrack Post Production Algorithms

An Auphonic Multitrack post production takes multiple parallel input audio tracks/files, analyzes and processes them individually as well as combined and creates the final mixdown automatically.

Leveling, dynamic range compression, gating, noise and hum reduction, crosstalk removal, ducking and filtering can be applied automatically according to the analysis of each track.

Loudness normalization and true peak limiting are applied to the final mixdown.

All algorithms were trained with data from our web service and they keep learning and adapting to new audio signals every day.

Auphonic Multitrack is most suitable for programs in which dialog or speech is the most prominent content: podcasts, radio, broadcast, lecture and conference recordings, film and videos, screencasts, etc.

It is not built for music-only productions.

Audio examples with detailed explanations of what our algorithms are doing can be found at:

https://auphonic.com/features/multitrack

Warning

Please read the Multitrack Best Practice before using our multitrack algorithms!

Multitrack Adaptive Leveler

Adaptive Leveler in Master Track:

Leveling Parameters in Individual Tracks:

Similar to our singletrack Adaptive Leveler, the Multitrack Advanced and Adaptive Levelers correct level differences between tracks, speakers, music and speech within one audio production.

Using the knowledge from signals of all tracks allows us to produce much better results compared to our singletrack version.

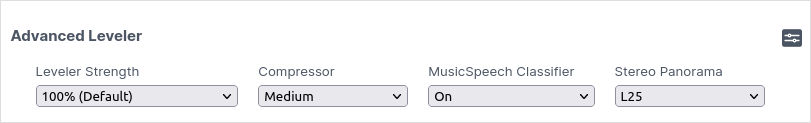

The Multitrack Advanced Leveler analyses which parts of each audio track should be leveled, how much they should be leveled, and how much dynamic range compression should be applied to achieve a balanced overall loudness in the final master track.

For all parameters please see Multitrack Advanced Leveler Settings

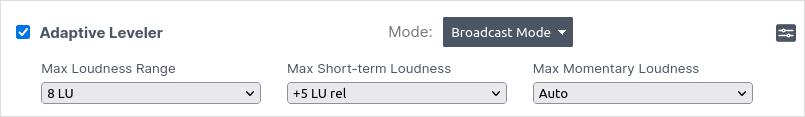

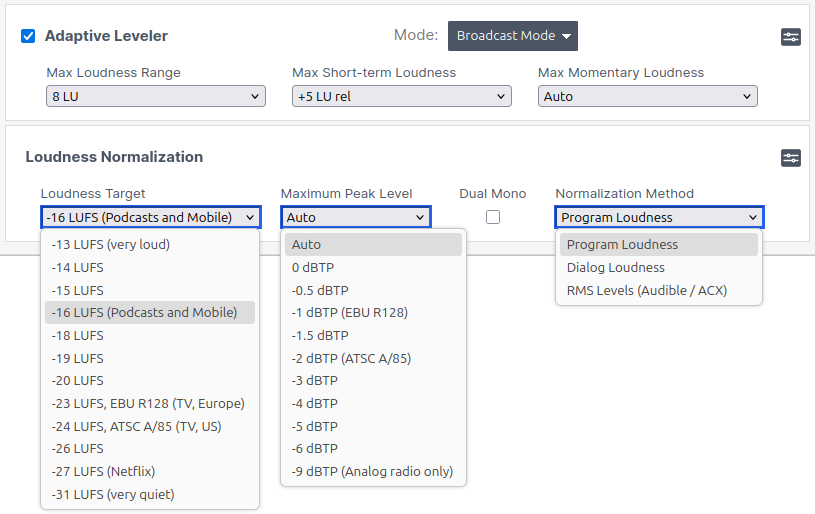

In addition to these track-specific parameters, you can switch the Multitrack Adaptive Leveler in the Master Track Audio Algorithms to Broadcast Mode to control the combined leveling strength. The volume changes made by our leveling algorithms are then adjusted so that the final mixdown of the multitrack production meets the given MaxLRA, MaxS, or MaxM target values, as in the Singletrack Broadcast Mode.

For all parameters please see Multitrack Adaptive Leveler Settings

We analyze all input audio tracks to classify speech, music and background segments and process them individually:

We know exactly which speaker is active in which track and can therefore produce a balanced loudness between tracks.

Loudness variations within one track (changing microphone distance, quiet speakers, different songs in music tracks etc.) are corrected.

Dynamic range compression is applied on each speech track automatically (see also Loudness Normalization and Compression). Music segments are kept as natural as possible.

Background segments (noise, wind, breaths, silence etc.) are classified and won’t be amplified. (see also Automatic Ducking, Foreground and Background Tracks)

Each track is processed with your selected stereo panorama factor to create a stereo mix with a spatial impression.

Annotated Multitrack Adaptive Leveler Audio Examples:

Please listen to our Multitrack Audio Example 1, Multitrack Audio Example 3 and the Singletrack Adaptive Leveler Audio Examples.

They include detailed annotations to illustrate what our algorithms are doing!

Adaptive Noise Gate

If audio is recorded from multiple microphones and all signals are mixed, the noise of all tracks will add up as well. The Adaptive Noise Gate decreases the volume of segments where a speaker is inactive, but does not change segments where a speaker is active.

This results in much less noise in the final mixdown.

Our classifiers know exactly which speaker or music segment is active in which track. Therefore we can set all parameters of the Gate / Expander (threshold, ratio, sustain, etc.) automatically according to the current context.
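The effect of summed noise can be illustrated with a little arithmetic. The following is a minimal sketch, not Auphonic's implementation: it assumes the noise in each track is uncorrelated (so it adds in power), and the function name, the -60 dB noise floors, and the 12 dB gate attenuation are hypothetical values chosen for illustration.

```python
import math

def mixed_noise_floor_db(noise_levels_db, gate_attenuation_db=None):
    """Noise floor (dB) of a mix of tracks whose noise is uncorrelated.

    noise_levels_db: per-track noise level in dB.
    gate_attenuation_db: optional per-track attenuation (positive dB)
    applied by a gate to tracks whose speaker is currently inactive.
    """
    if gate_attenuation_db is None:
        gate_attenuation_db = [0.0] * len(noise_levels_db)
    # Uncorrelated noise adds in power, not in amplitude.
    total_power = sum(
        10 ** ((level - attenuation) / 10)
        for level, attenuation in zip(noise_levels_db, gate_attenuation_db)
    )
    return 10 * math.log10(total_power)

# Four microphones, each with a -60 dB noise floor, mixed without gating.
ungated = mixed_noise_floor_db([-60.0] * 4)
# Same mix while only track 1 is speaking: the other three are gated by 12 dB.
gated = mixed_noise_floor_db([-60.0] * 4, [0.0, 12.0, 12.0, 12.0])
print(f"ungated: {ungated:.1f} dB, gated: {gated:.1f} dB")
```

In this hypothetical setup the ungated mix has a noise floor about 6 dB above a single track, while gating the three inactive tracks brings it back to within about 1 dB of a single track.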

Annotated Adaptive Noise Gate Audio Examples:

Please listen to our Multitrack Audio Example 2 and the other Multitrack Audio Examples.

They include detailed annotations to illustrate what our algorithms are doing!

Remove Mic Bleed

When recording multiple people with multiple microphones in one room, the voice of speaker 1 will also be recorded in the microphone of speaker 2. This Bleed / Spill / Crosstalk results in a reverb or echo-like effect in the final audio mixdown.

If you try to correct that using a Noise Gate / Expander, it is very difficult to set the correct parameters, because the bleed might be very loud.

Our multitrack algorithms know exactly when and in which track a speaker is active and can therefore remove the same or correlated signals (= bleed) from all other tracks. This results in a more direct signal and decreases ambience and reverb.

Annotated Bleed Removal Audio Examples:

Please listen to our Multitrack Audio Example 4 and the other Multitrack Audio Examples.

They include detailed annotations to illustrate what our algorithms are doing!

Noise and Reverb Reduction

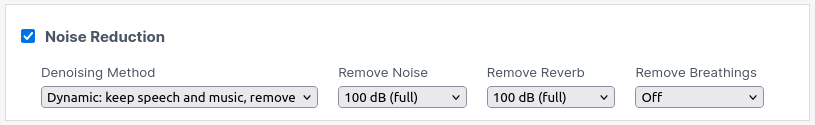

In the Auphonic Web Service, you can choose from four methods to define which kind of noise you want to reduce:

The Static Denoiser removes reverb and stationary, technical noises, while our Dynamic Denoiser removes everything but voice and music. Select Speech Isolation if you only want to keep speech, so all noise and even music will be removed. The Classic Denoiser also removes static noise, but it works only if there is a short silent segment, where a noise print can be extracted.

You can specify your favorite denoising algorithms for every individual track. Therefore, multitrack noise reduction algorithms can produce much better results compared to our singletrack Noise and Reverb Reduction algorithms.

A possible setup could be:

Track1: static denoiser for speaker1 to keep sound effect and remove static hiss and hum

Track2: dynamic denoiser for speaker2 to keep music jingles but remove construction noises

Track3: speech isolation for speaker3 to remove background chatter, wind noises and music

Track4: no denoiser for pure music track without noise

For maximum flexibility, with Speech Isolation, Dynamic Denoiser and Static Denoiser, you can also separately control the reduction amounts for each track:

Remove Noise: Reduce this amount, if you don’t want to completely eliminate the noise, but keep some atmosphere.

Remove Reverb: Reduce this amount to quietly keep the reverberation for a spatial impression.

Remove Breaths: Reduce this amount to keep breath sounds in your breath meditation recording. (not available for Static Denoiser)

These parameters allow you to increase the speech intelligibility while preserving a natural feel if desired.

For more details about all available parameters, please see Noise Reduction Settings.

Annotated Multitrack Noise Reduction Audio Examples:

Please listen to our Multitrack Audio Examples and Noise Reduction Audio Examples.

Multitrack Dynamic Denoiser

The Dynamic Denoiser preserves speech and music signals but removes everything else from your audio or video file. It is best suited to tracks that contain fast-changing and complex noises, in situations like:

Crowded Places: Chatter and noises from people in the background.

Outdoor Recordings: Clean up background noises like wind or traffic.

Breakfast Interview: Get rid of mouth noises from eating during a recording session.

Large rooms: Eliminate echo and reverberation.

Enthusiastic Speech: Remove unwanted inhalation and exhalation sounds.

During the analysis of an audio signal, metadata is generated that classifies contents such as spoken word, music in the foreground or background, different types of noises, silence, and more. Based on this metadata, every selected track is processed with AI algorithms that continuously adapt over time to apply the best matching settings for every tiny segment of your audio. These adaptive noise reduction algorithms ensure that a consistent, high sound quality is produced throughout the entire recording while removing as many unwanted noises as possible.

Please pay attention not to use the Dynamic Denoiser, if you want to keep sound effects or ambient sounds in any kind of audio play content!

Multitrack Speech Isolation

Our Speech Isolation algorithms keep only speech and remove everything else, including music, from your audio or video file. They are best suited to speech tracks containing music and fast-changing, complex noises. For example:

Videos: Increase the speech intelligibility by reducing or completely removing the background music of your video.

Bar Interview: Silence music, clinking glasses, and chatter from the background.

Home Recordings: Clean up noise from your neighbor rumbling and practicing piano.

During the analysis of an audio signal, metadata is generated that classifies contents such as spoken word, music in the foreground or background, different types of noises, silence, and more. Based on this metadata, every selected track is processed separately with AI algorithms that continuously adapt over time to apply the best matching settings for every tiny segment of your audio. These adaptive noise reduction algorithms ensure that a consistent, high sound quality is produced throughout the entire recording while removing as much unwanted music and noise as possible.

Please pay attention not to use Speech Isolation algorithms, if you want to keep jingles, sound effects, or ambient sounds in any kind of audio play content!

Multitrack Static Denoiser

The (New) Static Denoiser algorithm removes reverb and slowly varying broadband background noise from audio files even when music is in the mix. Static denoising is the perfect choice for any kind of audio drama or guided music meditation, where you want to keep music, sound effects or ambient sounds, like a rainforest soundscape.

In contrast to the Classic Denoiser, which extracts and subtracts noise profiles from silent segments of your audio, the Static Denoiser uses AI algorithms for noise detection and can thus also remove static noise from recordings with continuous music.

Per default the Static Denoiser is set to full reverb and static noise reduction. But you can, for instance, also manually set the parameter Remove Reverb to a lower value, if you prefer to keep some reverb.

However, be aware that you cannot remove any non-static noises (e.g. vinyl crackling) or breath sounds with the Static Denoiser. If you want that, consider using the Dynamic Denoiser or the Speech Isolation method.

Multitrack Classic Denoiser

Our Classic Denoiser (former version of our Static Denoiser) algorithms only remove broadband background noise and hum from audio files with slowly varying backgrounds when there is no music. For the Classic Denoiser to work, the input audio must have a silent segment from which a noise profile can be extracted. Therefore, it should only be used for any kind of audio play content with broadband hiss and hum but no music, where you want to keep sound effects or ambient sounds, like a singing bird in your ornithology podcast.

First, the audio of each selected track is analyzed and segmented into regions with different background noise characteristics, and subsequently, Noise Prints are extracted for each region.

Per default, there is also Hum Reduction activated with the Classic Denoiser. If any hum is present in the recording, the hum base frequency (50Hz or 60Hz) and the strength of all its partials (100Hz, 150Hz, 200Hz, 250Hz, etc.) are also identified for each region.

Based on this metadata of all analyzed audio regions, our classifier finally decides how much noise and hum reduction is needed in each region and automatically removes the noise and hum from the audio signal of every selected track.
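The hum partials mentioned above are integer multiples of the base frequency. As a small worked example (the function name and the upper frequency limit are hypothetical and not part of Auphonic's interface), they can be enumerated like this:

```python
def hum_partials(base_hz, max_hz=1000.0):
    """List the hum base frequency and its partials up to max_hz.

    Partials are integer multiples of the base frequency
    (e.g. 50, 100, 150, ... Hz for 50 Hz mains hum).
    """
    if base_hz <= 0:
        raise ValueError("base_hz must be positive")
    partials = []
    k = 1
    while base_hz * k <= max_hz:
        partials.append(base_hz * k)
        k += 1
    return partials

print(hum_partials(50.0, 250.0))  # 50 Hz mains
print(hum_partials(60.0, 250.0))  # 60 Hz mains
```

A hum reduction stage would typically attenuate narrow bands around these frequencies; how strongly each one is attenuated is decided per region by the classifier described above.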

You can also manually set the parameter Remove Noise for every individual track, if you prefer more noise reduction. However, be aware that high noise reduction amounts might result in artifacts!

For best results, please also see our Usage Tips for Classic Denoiser.

Automatic Ducking, Foreground and Background Tracks

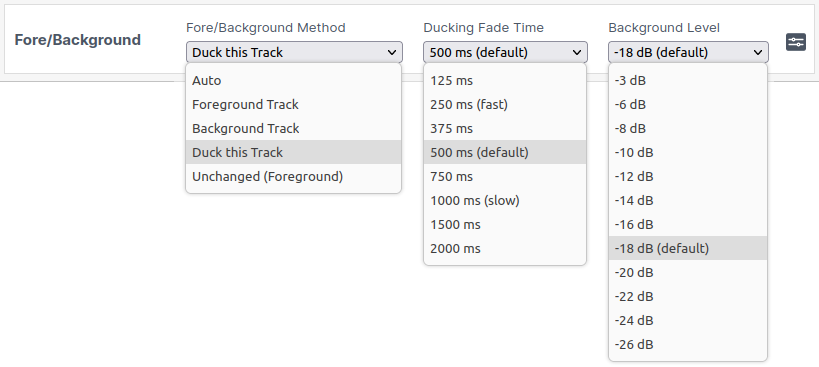

The parameter Fore/Background controls whether a track should be in foreground, in background, ducked, or unchanged.

Ducking automatically reduces the level of a track if speakers in other tracks are active. This is useful for intros/outros, translated speech, or for music, which should be softer if someone is speaking.
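The basic idea of ducking can be sketched as a gain curve driven by speech activity in the other tracks. This is only an illustration under stated assumptions: the frame-based activity flags, the -12 dB ducking depth, and the 3 dB per-frame ramp are hypothetical, and Auphonic's actual ducking behavior is determined by its own analysis.

```python
def ducking_gains_db(other_speech_active, duck_db=-12.0, step_db=3.0):
    """Per-frame gain in dB for a ducked track.

    other_speech_active: list of bools, True where any other track
    contains active speech in that frame.
    The gain ramps toward duck_db while speech is active and back
    toward 0 dB otherwise, by at most step_db per frame, so that
    level changes are gradual rather than abrupt.
    """
    gains = []
    current = 0.0
    for active in other_speech_active:
        target = duck_db if active else 0.0
        if current > target:
            current = max(target, current - step_db)
        elif current < target:
            current = min(target, current + step_db)
        gains.append(current)
    return gains

activity = [False, False, True, True, True, True, True,
            False, False, False, False, False]
gains = ducking_gains_db(activity)
print(gains)
```

The music is lowered step by step once a speaker starts and returns to its original level after the speaker stops.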

If Fore/Background is set to Auto, Auphonic automatically analyzes each segment of your track and decides if it should be in foreground or background:

Speech tracks will always be in foreground.

In music tracks, all segments/clips (songs, intros, etc.) are classified as background or foreground segments and are mixed to the production accordingly. Each clip will be leveled individually, therefore all relative loudness differences between clips in this track will be lost.

The automatic fore/background classification should work most of the time.

However, in special or artistic productions, like a very complex background music track or if you do the ducking and all relative level changes manually in your audio editor, you can force the track to be in foreground, in background, or unchanged:

Background: Each segment/clip of the track is mixed to be in background (softer compared to foreground speech).

Foreground: The track is mixed to be in foreground (same level as foreground speech).

Each segment/clip (songs, intros, etc.) of the track will be leveled individually, therefore all relative loudness differences between clips in this track will be lost.

Unchanged (Foreground): Level relations within this track won’t be changed at all.

It will be added to the final mixdown so that foreground/solo parts of this track will be as loud as (foreground) speech from other tracks.

See also How should I set the Parameter Fore/Background.

Annotated Multitrack Fore/Background/Ducking Audio Examples:

Please listen to the Automatic Ducking Audio Example and to the Fore/Background Example.

They include detailed annotations to illustrate what our algorithms are doing!

Adaptive Filtering

Multitrack High-Pass Filtering

Our adaptive High-Pass Filtering algorithm cuts disturbing low frequencies and interferences, depending on the context of each track.

First we classify the lowest wanted signal in every track segment: male/female speech base frequency, frequency range of music (e.g. lowest base frequency), noise, etc. Then all unnecessary low frequencies are removed adaptively in every audio segment, so that interferences are removed but the overall sound of the track is preserved.

We use zero-phase (linear) filtering algorithms to avoid asymmetric waveforms: in asymmetric waveforms, the positive and negative amplitude values are disproportionate - please see Asymmetric Waveforms: Should You Be Concerned?.

Asymmetrical waveforms are quite natural and not necessarily a problem. They are particularly common on recordings of speech, vocals and can be caused by low-end filtering. However, they limit the amount of gain that can be safely applied without introducing distortion or clipping due to aggressive limiting.
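One common way to obtain a zero-phase filter is forward-backward filtering: the signal is filtered once, reversed, filtered again with the same filter, and reversed back, which cancels the phase shift. The sketch below uses a simple first-order high-pass purely for illustration; the filter design and the coefficient are assumptions, and the filters Auphonic actually uses are not described here.

```python
def one_pole_highpass(samples, coeff):
    """Causal first-order high-pass: y[n] = coeff * (y[n-1] + x[n] - x[n-1])."""
    out = []
    prev_x = 0.0
    prev_y = 0.0
    for x in samples:
        y = coeff * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out

def zero_phase_highpass(samples, coeff=0.8):
    """Apply the high-pass forward and then backward to cancel its phase shift."""
    forward = one_pole_highpass(samples, coeff)
    backward = one_pole_highpass(forward[::-1], coeff)
    return backward[::-1]

# Impulse in the middle of an otherwise silent buffer.
impulse = [0.0] * 201
impulse[100] = 1.0

causal = one_pole_highpass(impulse, 0.8)
response = zero_phase_highpass(impulse)

# The causal filter only reacts after the impulse, while the zero-phase
# response is symmetric around it.
print(causal[99], causal[101])
print(response[99], response[101])
```

The symmetric response is what keeps the filter from shifting frequency components relative to each other, which is the mechanism by which low-end filtering can otherwise make waveforms asymmetric.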

Multitrack Voice AutoEQ Filtering

The AutoEQ (Automatic Equalization) automatically analyzes and optimizes the frequency spectrum of a voice recording, to avoid speech that sounds very sharp, muddy, or otherwise unpleasant.

Using Auphonic AutoEQ, spectral EQ profiles are created separately for each track and each speaker and change continuously over time. The aim of those time-dependent EQ profiles is to create a constant, warm, and pleasant sound in the output file even if voices within a track change slightly, for example, due to modified speaker-microphone positions.

Plosive reduction (De-Plosive), which lowers strong ‘ppp’ or ‘ttt’ pop sounds in speech, is built into both the AutoEQ and the Noise Reduction algorithms. De-Plosive is applied when AutoEQ is enabled on its own, or when either “Dynamic Denoiser” or “Speech Isolation” is active. For the most effective plosive removal, use AutoEQ together with one of these denoising methods, as both algorithms contribute to reducing plosives. Note that De-Plosive is not applied when only the “Classic Denoiser” or “Static Denoiser” and “Adaptive high-pass filtering” are used.

For more details, please also read our blog post on Auphonic AutoEQ Filtering.

Multitrack Bandwidth Extension

Voice AutoEQ + Bandwidth extension (BWE) artificially recreates higher audio frequencies that may have been lost or limited during recording, transmission, or compression. By analyzing the existing frequency information in an audio signal, our algorithm can predict and synthesize additional higher-frequency components. This approach restores the brightness and clarity of muffled, low-quality audio in voice calls or old recordings to make it sound more natural and pleasant to listeners.

The Auphonic Bandwidth Extension always includes the Voice AutoEQ and is similarly designed specifically for voice enhancement without affecting your carefully chosen music and without emphasizing noise, reverb or other environmental sounds.

For more details, please also see our blog post on Auphonic Bandwidth Extension.

Loudness Specifications and True Peak Limiter

Loudness Normalization and a True Peak Limiter can be applied after the final mixdown of all individual tracks.

Our Loudness Normalization Algorithms calculate the loudness of your audio and apply a constant gain to reach a defined target level in LUFS (Loudness Units relative to Full Scale) or RMS, so that multiple processed files have the same average loudness.
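The constant gain follows directly from the measured and target loudness, because a difference of 1 LU corresponds to 1 dB. A minimal sketch, assuming the integrated loudness has already been measured (the measurement itself is not shown, and the function name and example values are hypothetical):

```python
def normalization_gain(measured_lufs, target_lufs):
    """Return the constant gain needed to reach the target loudness.

    Returns a tuple (gain in dB, linear amplitude factor).
    """
    gain_db = target_lufs - measured_lufs
    linear_factor = 10 ** (gain_db / 20)
    return gain_db, linear_factor

# A mixdown measured at -23.5 LUFS that should reach a -16 LUFS target.
gain_db, factor = normalization_gain(-23.5, -16.0)
print(f"gain: {gain_db:+.1f} dB, multiply samples by {factor:.3f}")
```

Raising the level like this can push peaks above the allowed maximum, which is why normalization is combined with a true peak limiter.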

In combination with parameters of our Adaptive Leveler, you can define a set of parameters like integrated loudness target, maximum true peak level, MaxLRA, MaxM, MaxS, dialog normalization, etc., which are described in detail in Audio Algorithms for Master Track.

The loudness is calculated according to the latest broadcast standards, so you never have to worry about admission criteria for different platforms or broadcasters again:

Auphonic supports loudness targets for television (EBU R128, ATSC A/85), radio and mobile (-16 LUFS: Apple Music, Google, AES Recommendation), Amazon Alexa, YouTube, Spotify, Tidal (-14 LUFS), Netflix (-27 LUFS), Audible / ACX audiobook specs (-18 to -23 dB RMS) and more.

For more detailed information, please see our articles about: Audio Loudness Measurement and Normalization, Loudness Targets for Mobile Audio, Podcasts, Radio and TV, The New Loudness Target War, and RMS-based Loudness Normalization for Audible / ACX.

A True Peak Limiter, with 4x oversampling to avoid intersample peaks, is used to limit the final output signal and ensures compliance with the selected loudness standard.
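Why oversampling matters can be shown with a short experiment: the reconstructed waveform between two samples can be higher than either sample. The following sketch estimates the true peak with a simple Hann-windowed sinc interpolator. It only detects intersample peaks and does not limit them, and the interpolator design, function name, and test signal are assumptions for illustration, not Auphonic's implementation.

```python
import math

def oversampled_peak(samples, factor=4, half_width=16):
    """Estimate the true (intersample) peak by windowed-sinc interpolation."""
    peak = 0.0
    # Skip the edges so every interpolation point has a full set of neighbours.
    for n in range(half_width, len(samples) - half_width):
        for step in range(factor):
            t = n + step / factor
            value = 0.0
            for k in range(n - half_width + 1, n + half_width + 1):
                d = t - k
                sinc = 1.0 if d == 0 else math.sin(math.pi * d) / (math.pi * d)
                window = 0.5 * (1.0 + math.cos(math.pi * d / half_width))
                value += samples[k] * sinc * window
            peak = max(peak, abs(value))
    return peak

# A full-scale sine at a quarter of the sample rate, sampled 45 degrees
# off its peaks: every sample has magnitude of about 0.707.
signal = [math.sin(math.pi / 2 * n + math.pi / 4) for n in range(64)]

sample_peak = max(abs(s) for s in signal)
true_peak = oversampled_peak(signal)
print(f"sample peak: {sample_peak:.3f}, estimated true peak: {true_peak:.3f}")
```

The sample peak is about 0.707, while the estimated true peak is close to 1.0, roughly 3 dB higher. A limiter that only looked at sample values would miss this overshoot.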

We use a multi-pass loudness normalization strategy based on statistics from processed files on our servers, to more precisely match the target loudness and to avoid additional processing steps.

NOTE:

If you export the individual, processed tracks of a Multitrack Production (for further editing), they are not loudness normalized yet!

The loudness normalization must always be the last step in the processing chain, after the final mixdown and all other adjustments!

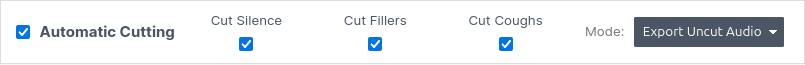

Automatic Cutting

Our automatic cutting algorithms detect and remove silent segments, filler words and respiratory noises (coughs, etc.), which occur in your audio recordings naturally. Usually, listeners do not want to hear silence segments, coughs, sneezes and a lot of filler words. Hence, cutting out these informationless sections is important to achieve a high-quality listening experience.

To additionally download all cut input tracks, choose the “Individual Track” format for “Output Files” (per default, the audio output is only the cut master track).

To control whether audio segments are cut or not, you can choose between different Cut Modes:

Apply Cuts: This mode removes all cut segments from the audio.

Set Cuts To Silence: This mode fades cut segments to silence instead of cutting them.

Export Uncut Audio: This mode detects and reports cut segments for further use but does not apply cuts. Once processing is done, you can download the auto-generated “Cut Lists” in various formats and import them into your favorite audio or video editor to review and apply the cuts there.

For manual fine-tuning of individual auto-generated cuts, you can also use our Auphonic Audio Inspector to adjust cut positions, deactivate existing or add entirely new cut regions.

When we cut your audio, we make sure that all chapter marks and speech recognition transcripts are adapted accordingly.
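Adapting a timestamp to cut audio amounts to subtracting the duration of everything that was removed before it. A minimal sketch of that idea, assuming non-overlapping cut regions given in seconds (the function name and the choice to snap a mark that falls inside a cut to the start of that cut are assumptions for illustration):

```python
def shift_after_cuts(timestamp, cuts):
    """Map a timestamp on the uncut timeline to the cut timeline.

    cuts: list of (start, end) tuples in seconds, non-overlapping.
    A timestamp inside a cut region is moved to where that cut begins.
    """
    removed = 0.0
    for start, end in sorted(cuts):
        if timestamp >= end:
            # The whole cut lies before the timestamp.
            removed += end - start
        elif timestamp > start:
            # The timestamp lies inside this cut: snap it to the cut start.
            removed += timestamp - start
            break
        else:
            # This cut and all later ones start after the timestamp.
            break
    return timestamp - removed

cuts = [(10.0, 12.5), (30.0, 31.0)]
chapters = [0.0, 11.0, 20.0, 45.0]
print([shift_after_cuts(t, cuts) for t in chapters])
```

The same mapping applies to word timestamps in a transcript.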

IMPORTANT: For video files we suggest using the “Export Uncut Audio” mode with Cut List export! The default “Apply Cuts” mode cuts the audio and deactivates the video output!

Listen to Automatic Cutting Audio Examples:

https://auphonic.com/features/automatic-cutting

Cut Silence

A few seconds of silence easily occur due to short speech breaks, breath pauses, or at the beginning, when the recording equipment has to be adjusted. The silence cutting algorithm reliably detects and cuts silent segments in your audio recordings while making sure that intended speech breaks, e.g. between two sentences, remain untouched.

For more details, please also see our blog post on Automatic Silence Cutting.

Cut Fillers

We have trained our filler word cutting algorithm to remove filler words, namely any kind of “uhm”, “uh”, “mh”, “ähm”, “euh”, “eh”, and similar with English, German, and Romance language data. However, the algorithm already works quite well for many similar-sounding languages, and we will also train more languages if necessary.

For multitrack productions, fillers are cut out only when the track containing the filler word is the only active track.

If another speaker is talking while the filler word occurs in one track, the filler is not cut but set to silence to avoid content loss in other tracks.
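The multitrack rule described above can be written as a small decision function. This is a sketch under assumptions: the track names, the representation of speech as (start, end) segments in seconds, and the function names are hypothetical and only illustrate the rule.

```python
def overlaps(a, b):
    """True if the two (start, end) intervals overlap."""
    return a[0] < b[1] and b[0] < a[1]

def filler_action(filler, filler_track, speech_by_track):
    """Decide how to handle a filler word in a multitrack production.

    filler: (start, end) of the filler word in seconds.
    filler_track: name of the track containing the filler.
    speech_by_track: dict mapping track name to a list of (start, end)
    speech segments.
    Returns "cut" if no other track is active during the filler,
    otherwise "silence" so that the other speaker's content is kept.
    """
    for track, segments in speech_by_track.items():
        if track == filler_track:
            continue
        if any(overlaps(filler, segment) for segment in segments):
            return "silence"
    return "cut"

speech = {
    "host": [(0.0, 8.0), (12.0, 20.0)],
    "guest": [(7.0, 11.0)],
}
print(filler_action((3.0, 3.4), "host", speech))  # guest is silent here
print(filler_action((7.5, 7.9), "host", speech))  # guest is talking here
```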

NOTE: We recommend always using filler cutting in combination with our Dynamic Denoiser or Speech Isolation algorithms. This is important to remove any reverb or noise that might cause artifacts during cutting.

For more details, please also see our blog post on Automatic Filler Cutting.

Cut Coughs

If you or your guests are not feeling their best, our cough cutting algorithm can automatically remove respiratory noises, like coughing, throat-clearing, sneezing and similar sounds from your audio — keeping your speech recordings clean, professional, and distraction-free.

NOTE: We recommend always using cough cutting in combination with our Dynamic Denoiser or Speech Isolation algorithms with Remove Breaths enabled. The distinction between heavy breaths and mild cold symptoms is not always clear-cut, and this way all respiratory noises will be removed.

For more details, please also see our blog post on Automatic Cough Cutting.

Cut Music

If your project contains foreground music that you want removed — such as intros, outros, or musical interludes — our music-cutting algorithm can automatically detect and cut all music segments, removing those parts entirely and keeping your speech recordings clean and focused.

Please note that the music cutting algorithm is tuned for music segments longer than about 20 seconds. Short segments lasting only a few seconds will not be cut. This is intentional to ensure that only music is removed while all speech remains fully preserved.

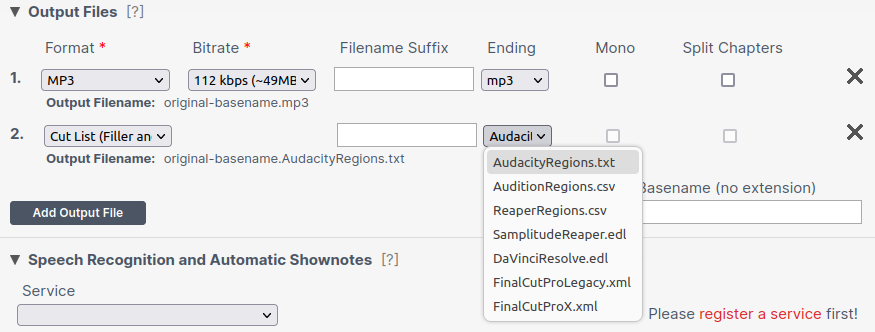

Export Cut Lists

You can add the “Cut List (Filler and Silence)” output in various formats to your Output Files. You can do this independently of the Cut Mode you selected.

The Cut List files will always be relative to your input files! Consider this when you import cut lists to your favourite audio or video editor.

Exporting cut lists allows you to import cuts into your favorite audio/video editor to review and apply the cuts manually. To generate the lists, choose “Cut List (Filler and Silence)” in the “Output Files” section. By selecting the correct “Ending” you can export the cut lists in a format that is suitable for your post production audio or video editor.

In multitrack productions, cut lists contain all cuts for the master track. To get the cut versions of all input tracks, select “Individual Tracks” as “Output File”.

We support region and EDL (edit decision list) formats:

Region formats:

“AudacityRegions.txt” – for Audacity import

“AuditionRegions.csv” – for Audition import

“ReaperRegions.csv” – for Reaper import

Region formats list filler and silence cut regions with their respective start and end timestamps.

You can import those cut region lists into your existing ‘Audacity’, ‘Audition’, or ‘Reaper’ projects. There you can edit the cut positions to your liking by deleting, moving, or adding individual cut positions. After that, you may apply the cuts manually.

EDL formats (Edit Decision List):

“SamplitudeReaper.edl” – for Samplitude and Reaper import

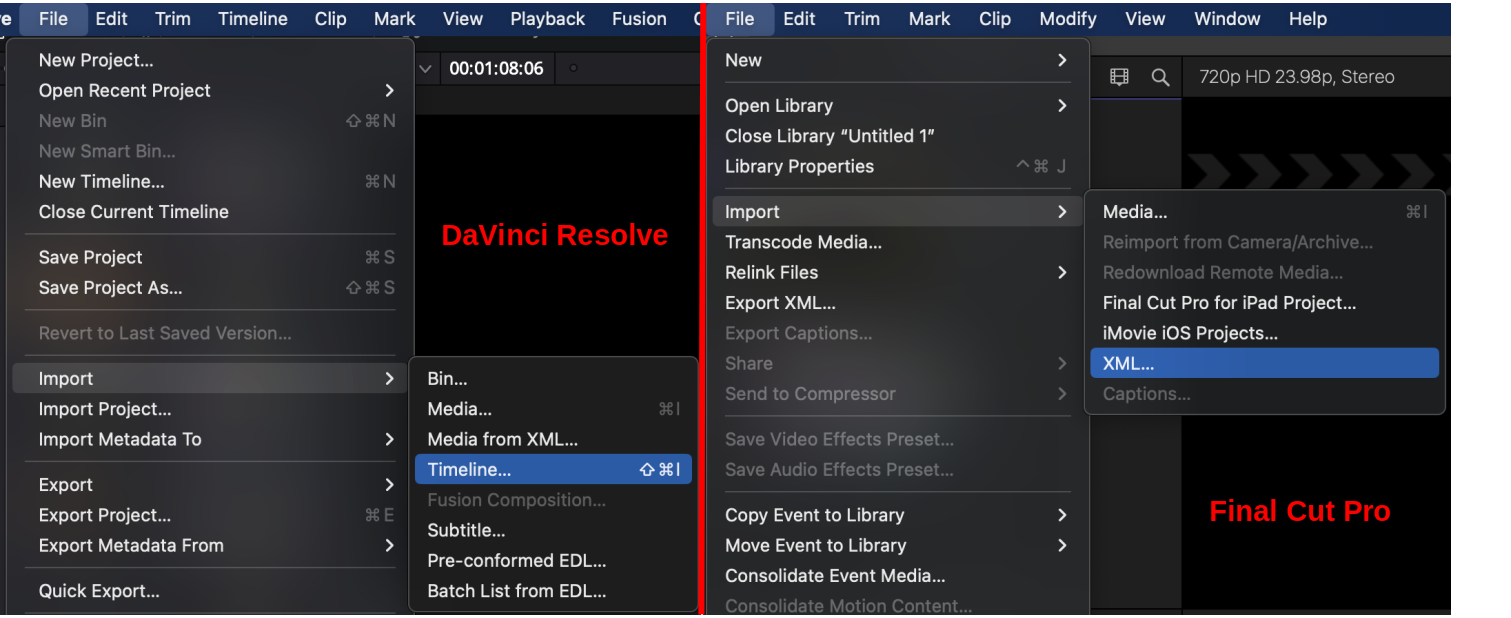

“DaVinciResolve.edl” – for DaVinci Resolve import (also known as “cmx3600” format and compatible with various editors)

“FinalCutProX.fcpxml” – for Final Cut Pro X import

“FinalCutProLegacy.xml” – for Final Cut Pro 7 (and previous versions) import

EDL formats can be imported in your software to automatically apply cuts from the EDL file. Depending on the software you use, you may also edit individual cuts manually before applying them. Be aware though that in some editors it may not be possible to add them to an existing project. If this is the case, simply open a new project with the EDL file instead.

NOTE: The cut lists will contain all cuts detected by the enabled Automatic Cutting Algorithms. If neither “Cut Fillers” nor “Cut Silence” is selected, the cut list will be empty!

For more details, please also see our blog post on Cut Lists Export.
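If you want to post-process a region list yourself, the start and end timestamps can be turned into the segments that remain after cutting. The sketch below assumes the tab-separated "start, end, label" layout of Audacity label tracks, with times in seconds; whether the exported “AudacityRegions.txt” matches this layout exactly is an assumption, and the label texts and function names are hypothetical.

```python
def parse_regions(text):
    """Parse tab-separated 'start<TAB>end<TAB>label' lines into tuples."""
    regions = []
    for line in text.splitlines():
        if not line.strip():
            continue
        start, end, *label = line.split("\t")
        regions.append((float(start), float(end), label[0] if label else ""))
    return regions

def kept_segments(regions, duration):
    """Return the (start, end) segments that remain after removing all regions."""
    kept = []
    position = 0.0
    for start, end, _label in sorted(regions):
        if start > position:
            kept.append((position, start))
        position = max(position, end)
    if position < duration:
        kept.append((position, duration))
    return kept

example = "1.500000\t2.250000\tfiller\n10.000000\t14.000000\tsilence\n"
regions = parse_regions(example)
print(kept_segments(regions, duration=20.0))
```

Because cut lists are relative to the input files, the kept segments are also expressed on the timeline of the uncut input.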

Cut Videos

You can use the cut list files to cut video files. Just head to your favourite video editor and import the cut list file as a new timeline. This will automatically place and cut your video clip onto a newly created timeline.

For this to work you need to select either the “Set Cuts To Silence” mode or the “Export Uncut Audio” mode in Automatic Cutting. We strongly suggest the “Set Cuts To Silence” mode, as it will avoid audio artifacts.

Also, make sure that your video file is in the same directory as your cut list file! Otherwise, your video editor won’t be able to locate your video file. Additionally, DaVinci Resolve now requires you to first import the video file (e.g. myvideo.mp4) to the media pool before importing the timeline.

The following screenshot shows how to create a new timeline with your cut list file in DaVinci Resolve (left) and Final Cut Pro (right). Just click on the menu entry which is shown in the screenshot and select your cut list file. Don’t forget to first import the video file in DaVinci Resolve though.